Creating Novel Reading Experiences

While writing and reading date back to the 4th millennium BC, reading as a way of accessing information has evolved from a privilege reserved for an elite few to a skill practised by the majority of the population. Literacy levels have risen to the point where, today, more than 86% of the world’s population is considered literate. Reading techniques themselves have evolved from reading aloud to silent reading, and with the advent of the digital revolution, where, how, and what we read have changed significantly. The information age provides us with both opportunities and challenges, which change our reading behaviour. Various devices are now available for reading, and their mobility provides us with unprecedented opportunities to engage with text anytime, anywhere.

This research focuses on the challenges, best practices, and future directions of ubiquitous technologies to support reading activities. By developing new reading UI design principles for emerging technologies, deploying intelligent scheduling algorithms, and developing new measures for assessing the quality of reading sessions, we build systems that provide better readability, prioritise information gain over attention capture, and instil better reading habits in their users.

Talk

Here is a talk outlining our research, entitled Reading in the 21st Century, which I recently gave (remotely) at the Empathetic Computing Lab in Auckland.

Projects

In our current research, we look at mobile screens as well as mixed reality displays and utilise eye-tracking data and other sensors (e.g., IMU, touch, camera, infrared) that give us insights into the reader’s state of mind. The increasing number and sensitivity of mobile sensors make it possible to study reading behaviour directly in the wild. Further, mixed reality technologies allow readers to interact with text in novel ways. In virtual environments, reading surfaces, ambience, and dynamic content can be adjusted on the fly. Through a broad range of prototypes, we build and investigate novel reading interfaces, which help us assess the effects on skim reading and in-depth reading.

Attention-Aware Interfaces and Adaptations

Readers exhibit different levels of concentration and cognitive capacity over the course of the day. During phases of low performance, the ability to concentrate is very limited, which negatively affects reading comprehension and memory. Reading applications and devices do not take these variations in performance into account and often overburden the user with information or exhibit a lack of stimulation.

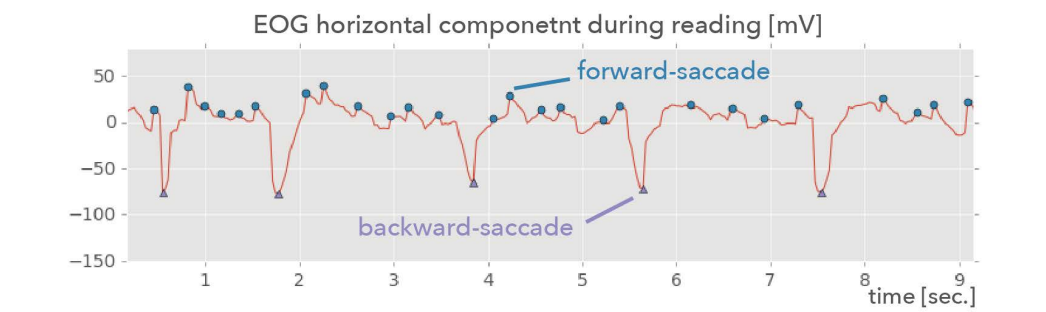

In this research strand, we utilise sensor data from eye trackers or electrooculography (EOG) to build a window into the reader’s mind and adjust the reading interface in real time. Using machine learning, we have been able to detect episodes of mind-wandering (Brishtel, 2020), create proactive reading schedulers (Dingler, 2018), and investigate reading interventions (Ishimaru, 2016). Together with the German Research Center for Artificial Intelligence (DFKI), we instrument modern learning labs with sensors and attention-aware applications.
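As a rough illustration of how an interface might react to such sensor-derived signals, the sketch below smooths a stream of attention estimates and toggles a supportive reading mode with hysteresis. It is not the method used in the cited work: the `AttentionMonitor` class, the 0-to-1 attention score (assumed to come from some upstream classifier), and all thresholds are hypothetical choices made purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class AttentionMonitor:
    """Hypothetical helper: decides when to switch the reading UI
    into a supportive mode based on a stream of attention scores."""

    alpha: float = 0.3      # weight of the newest score in the moving average (assumed)
    low: float = 0.4        # enter support mode when the average drops below this (assumed)
    high: float = 0.6       # leave support mode when the average rises above this (assumed)
    smoothed: float = 1.0   # start by assuming the reader is fully attentive
    support_mode: bool = False

    def update(self, score: float) -> bool:
        """Feed one attention score in [0, 1]; return whether support mode is on."""
        if not 0.0 <= score <= 1.0:
            raise ValueError("score must lie in [0, 1]")
        # Exponential moving average dampens momentary dips in the signal.
        self.smoothed = self.alpha * score + (1.0 - self.alpha) * self.smoothed
        # Two thresholds (hysteresis) keep the interface from flickering.
        if self.support_mode and self.smoothed > self.high:
            self.support_mode = False
        elif not self.support_mode and self.smoothed < self.low:
            self.support_mode = True
        return self.support_mode


monitor = AttentionMonitor()
for score in [0.0, 0.0, 0.0, 1.0, 1.0]:
    print(score, monitor.update(score))
```

Using separate entry and exit thresholds is one simple way to avoid rapid switching when the estimate hovers around a single cut-off; a real system would need thresholds tuned on user data.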

Readability Research

Together with Adobe’s Document Intelligence Lab and a vast network of researchers, we have been working on a comprehensive resource on readability. The resulting materials lay a foundation for readability research and include a framework for modern, multidisciplinary readability research (Beier et al., 2021).

Readability refers to aspects of visual information design that impact information flow from the page to the reader. Readability can be enhanced by changes to the typographical characteristics of a text. These aspects can be modified on demand, instantly improving the ease with which a reader can process and derive meaning from text. We call on a multidisciplinary research community to take up these challenges to elevate reading outcomes and provide the tools to do so effectively.

Novel Reading Experiences in Mixed Reality

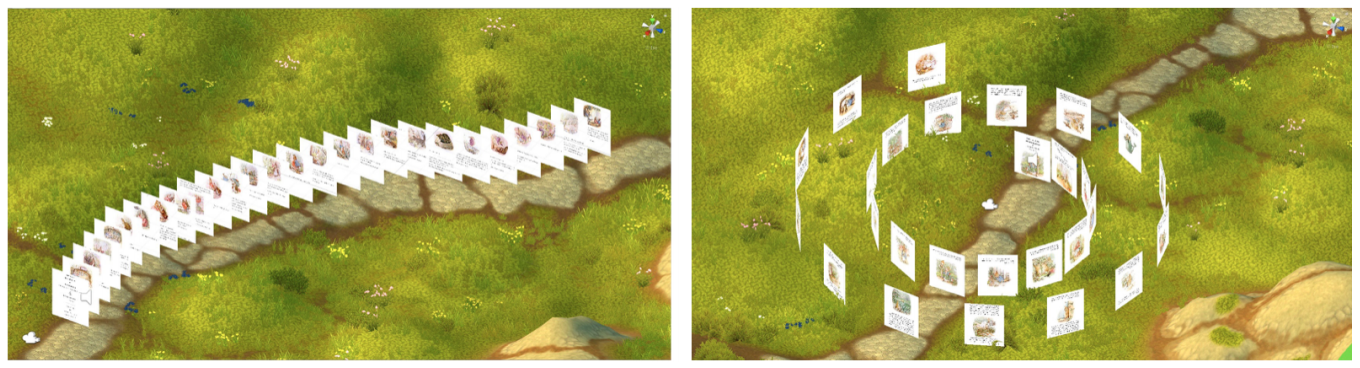

While text tends to lead a rather static life on paper pages and 2D screens, augmented and virtual reality allow the dynamic adaptation of content, presentation, and ambience in order to create immersive and novel reading experiences. In this research strand, we explore the text and interaction characteristics of 3D reading interfaces. In virtual reality (VR), where the reading surface is no longer confined to a 2D plane, our team investigates text renderings in 3D and the users’ ability to (literally) step into and navigate virtual books. Some commercial headsets, such as the FOVE VR, come with a built-in eye tracker that allows us to record users’ gaze in VR. A reading environment that is aware of the reader’s attention and text position can adjust the virtual environment on the fly. For example, background visuals and sounds could create a multimodal reading environment that changes along with the storyline, thereby creating an immersive reading experience.

So far, we have studied fundamental text characteristics in VR, such as the effect of angular height (i.e., text size), the user’s vergence distance, comfortable viewports, and colours (Dingler, 2018). We further investigated the rendering of text on 3D objects with different surface warps (plane, concave, convex) and the effects on legibility and the reading experience (Wei, 2020). For orientation and navigation, we created different pagination techniques to lay out entire books in VR (Dingler, 2020).

While VR allows for highly immersive environments, augmented reality (AR) enables readers to remain connected to the outside world and take the world’s context into consideration. AR interfaces overlay information on top of the real world by means of see-through devices. Text can be anchored in real environments and adjusted in relation to the objects in the environment. In our past work, we created a set of consistent reading experiences across a multitude of devices, including smartphones, watch interfaces, and heads-up displays (Dingler, 2018; Rzayev, 2018).

We propose to build on those fundamentals and integrate them into a set of novel reading interfaces for both VR (immersive books) and AR (text co-existing with the environment). These mixed reality technologies will allow authors to create new text interactions and reading experiences. Reading surfaces, ambience, and dynamic content are adjustable in real time and can be tailored to where the reader’s attention goes. The outcome of this research strand is the design and implementation of a series of AR/VR applications that adjust the reading experience to users and their environment, real or virtual.
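To make the notion of angular height concrete: in a 3D scene, how large text appears depends on both its physical height and its distance from the viewer. The sketch below uses the standard visual-angle relation, angle = 2 · atan(height / (2 · distance)), and its inverse. The function names and example values are illustrative assumptions and do not reproduce the parameters reported in the cited studies.

```python
import math


def angular_height_deg(text_height_m: float, distance_m: float) -> float:
    """Visual angle (in degrees) subtended by text of a given height
    viewed head-on from a given distance."""
    if text_height_m <= 0 or distance_m <= 0:
        raise ValueError("height and distance must be positive")
    return math.degrees(2.0 * math.atan(text_height_m / (2.0 * distance_m)))


def text_height_for_angle(angle_deg: float, distance_m: float) -> float:
    """World-space text height needed to subtend `angle_deg` at `distance_m`."""
    if not 0.0 < angle_deg < 180.0 or distance_m <= 0:
        raise ValueError("angle must be in (0, 180) and distance positive")
    return 2.0 * distance_m * math.tan(math.radians(angle_deg) / 2.0)


# Example (assumed values): keep text at a constant apparent size
# while moving the reading surface further away from the viewer.
for distance in (1.0, 2.0, 4.0):
    height = text_height_for_angle(1.5, distance)
    print(f"{distance:.1f} m -> text height {height * 100:.2f} cm")
```

Specifying text size as an angle rather than in metres or points lets a virtual reading surface be moved closer or further away without changing how large the text appears to the reader.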

RSVP Reading: Rapid Serial Visual Presentation

Limited screen size poses a challenge for reading user interfaces (UIs) and has been the subject of previous investigations: reading performance on mobile devices generally increases with larger font sizes, and larger fonts have an even stronger effect on readers’ subjective preference and lower the perceived level of difficulty. Rapid Serial Visual Presentation (RSVP) has been suggested for use on mobile devices and wearables in order to trade space for time. As smartwatches, with their even more limited screen real estate, become more prevalent, this technique has also gained commercial interest. Text can be displayed larger and at a higher resolution, as only a single word needs to fit on the display at a time. We have built a series of RSVP reading interfaces for smartwatches as well as head-mounted displays (Dingler, 2018) to investigate the potential of the technique for speed reading (Dingler, 2015) but also for at-a-glance information intake (Dingler, 2016).
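The space-for-time trade-off can be sketched in a few lines: instead of laying out a page, an RSVP reader turns text into a sequence of single-word frames, each shown for a duration derived from the target reading speed. The code below is a minimal sketch under stated assumptions, not the implementation used in our prototypes. The `RsvpFrame` type, the default of 300 words per minute, and the longer pause after punctuation are all hypothetical choices.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass(frozen=True)
class RsvpFrame:
    word: str           # the single word shown on the display
    duration_ms: float  # how long it stays visible


def rsvp_frames(text: str, words_per_minute: float = 300.0,
                punctuation_pause: float = 1.5) -> Iterator[RsvpFrame]:
    """Yield one frame per word; words ending in punctuation are shown
    longer (an assumed heuristic, not taken from the cited studies)."""
    if words_per_minute <= 0:
        raise ValueError("words_per_minute must be positive")
    base_ms = 60_000.0 / words_per_minute  # display time of a plain word
    for word in text.split():
        factor = punctuation_pause if word[-1] in ".,;:!?" else 1.0
        yield RsvpFrame(word, base_ms * factor)


for frame in rsvp_frames("Only one word fits. That is enough."):
    print(f"{frame.word:<8} {frame.duration_ms:.0f} ms")
```

A display loop would render each `word` at a fixed position and wait `duration_ms` before showing the next one; pausing, rewinding, and speed control would be layered on top of this sequence.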

Text Priming and Interruptions

As reading on mobile devices becomes more ubiquitous, content is consumed in shorter intervals and is punctuated by frequent interruptions. We explore reading applications that make use of the priming effect to boost memory and comprehension through summaries, highlights, and text visualisations (Angerbauer, 2015; Dingler, 2017; Kern, 2019) and that mitigate the effects of reading interruptions (Srivastava, 2021).

Relevant Publications

[1] Angerbauer, Katrin, Tilman Dingler, Dagmar Kern, and Albrecht Schmidt. “Utilizing the effects of priming to facilitate text comprehension.” In Proceedings of the 33rd Annual ACM Conference Extended Abstracts on Human Factors in Computing Systems, pp. 1043-1048. 2015.

[2] Beier, Sofie, Sam Berlow, Esat Boucaud, Zoya Bylinskii, Tianyuan Cai, Jenae Cohn, Kathy Crowley et al. “Readability Research: An Interdisciplinary Approach.” arXiv preprint arXiv:2107.09615 (2021).

[3] Brishtel, Iuliia, Anam Ahmad Khan, Thomas Schmidt, Tilman Dingler, Shoya Ishimaru, and Andreas Dengel. “Mind Wandering in a Multimodal Reading Setting: Behavior Analysis & Automatic Detection Using Eye-Tracking and an EDA Sensor.” Sensors 20, no. 9 (2020): 2546.

[4] Dingler, Tilman, Passant EL Agroudy, Gerd Matheis, and Albrecht Schmidt. “Reading-based screenshot summaries for supporting awareness of desktop activities.” In Proceedings of the 7th Augmented Human International Conference 2016, pp. 1-5. 2016.

[5] Dingler, Tilman, Dagmar Kern, Katrin Angerbauer, and Albrecht Schmidt. “Text Priming-Effects of Text Visualizations on Readers Prior to Reading.” In IFIP Conference on Human-Computer Interaction, pp. 345-365. Springer, Cham, 2017.

[6] Dingler, Tilman, Kai Kunze, and Benjamin Outram. “VR Reading UIs: Assessing Text Parameters for Reading in VR.” In Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems, pp. 1-6. 2018.

[7] Dingler, Tilman, Siran Li, Niels van Berkel, and Vassilis Kostakos. “Page-Turning Techniques for Reading Interfaces in Virtual Environments.” In 32nd Australian Conference on Human-Computer Interaction, pp. 454-461. 2020.

[8] Dingler, Tilman, Rufat Rzayev, Valentin Schwind, and Niels Henze. “RSVP on the go: implicit reading support on smart watches through eye tracking.” In Proceedings of the 2016 ACM International Symposium on Wearable Computers, pp. 116-119. 2016.

[9] Dingler, Tilman, Rufat Rzayev, Alireza Sahami Shirazi, and Niels Henze. “Designing consistent gestures across device types: Eliciting RSVP controls for phone, watch, and glasses.” In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, pp. 1-12. 2018.

[10] Dingler, Tilman, Alireza Sahami Shirazi, Kai Kunze, and Albrecht Schmidt. “Assessment of stimuli for supporting speed reading on electronic devices.” In Proceedings of the 6th Augmented Human International Conference, pp. 117-124. 2015.

[11] Dingler, Tilman, Benjamin Tag, Sabrina Lehrer, and Albrecht Schmidt. “Reading scheduler: proactive recommendations to help users cope with their daily reading volume.” In Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia, pp. 239-244. 2018.

[12] Ishimaru, Shoya, Tilman Dingler, Kai Kunze, Koichi Kise, and Andreas Dengel. “Reading interventions: Tracking reading state and designing interventions.” In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing: Adjunct, pp. 1759-1764. 2016.

[13] Kern, Dagmar, Daniel Hienert, Katrin Angerbauer, Tilman Dingler, and Pia Borlund. “Lessons Learned from Users Reading Highlighted Abstracts in a Digital Library.” In Proceedings of the 2019 Conference on Human Information Interaction and Retrieval, pp. 271-275. 2019.

[14] Rzayev, Rufat, Paweł W. Woźniak, Tilman Dingler, and Niels Henze. “Reading on smart glasses: The effect of text position, presentation type and walking.” In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, pp. 1-9. 2018.

[15] Sanchez, Susana, Tilman Dingler, Heng Gu, and Kai Kunze. “Embodied Reading: A Multisensory Experience.” In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, pp. 1459-1466. 2016.

[16] Srivastava, Namrata, Rajiv Jain, Jennifer Healey, Zoya Bylinskii, and Tilman Dingler. “Mitigating the Effects of Reading Interruptions by Providing Reviews and Previews.” In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, pp. 1-6. 2021.

[17] Wei, Chunxue, Difeng Yu, and Tilman Dingler. “Reading on 3D Surfaces in Virtual Environments.” In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), pp. 721-728. IEEE, 2020.